[This is Part One of a multi-post series on how my team is combining Robocopy, PowerShell, SQL, and Cold Fusion to track the metrics we generate when we use Robocopy for replicating data within our file server environment.]

Good day All

This year in my workgroup we are focusing on capturing metrics on the tasks that we do, and one thing that we do a great amount of is file replication. Whether we are replacing a server, and need to move all of the data to another server, or are simply load balancing the available space on a server’s data disks, we use Robocopy for the data replication. Robocopy has a host of features that make it our default copy tool, but the one that relates to our discussion today is its logging capability.

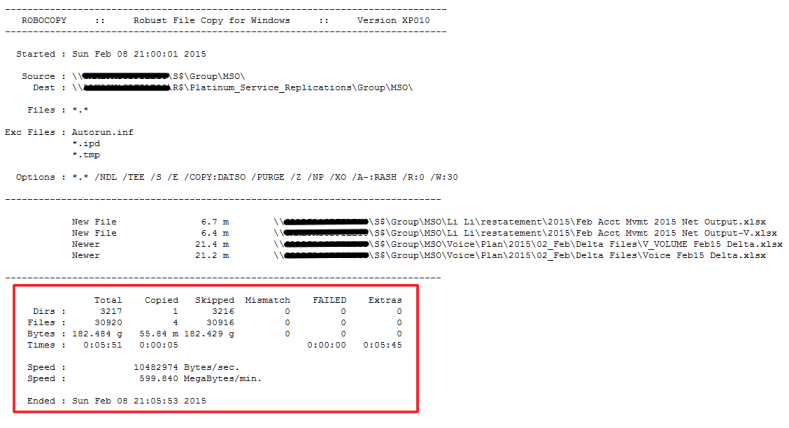

As stated above, part of the metrics focus for the year is to capture the amount of data the we are replicating. The primary method for capturing replication metrics is to parse the Robocopy logs we generate when we perform our daily replications. Using Regular-Expressions, and PowerShell, this blog post will examine a method for extracting the text needed for our metrics. The script file being discussed in this post is called Scan-RobocopyLogs.ps1; it and the test Robocopy log file we are scanning can be downloaded from my Box folder here.To get started here is an image of a Robocopy log file. In this particular screenshot very little new data was discovered, so the amount of files shown as moved is pretty small.

A few notes on the above image:

- I have renamed the names of the servers in the text file to keep anybody in my company from getting nervous. So it is not an original Robocopy output file, but the values are still valid.

- You will see a total of four files marked as either ‘New File’ or ‘Newer’. On a busy day, or a first time replication, many thousands of files could be potentially displayed. This is an incremental replication, and only those files that have been added or changed are actually copied.

- Robocopy summary information is contained in the red rectangle that I have overlaid onto the image of the log file. This red bordered area will be the primary focus of the metrics parsing.

In the red rectangle the data is presented in rows called Dirs, Files, Bytes, Times, Speed, and Ended. Also you should notice several columns labelled Total, Copied, Skipped, Mismatch, Failed, and Extras. Notice the number of Copied-Files is 4, and the number of of Skipped-Files is 30916. If any files had not been successfully replicated they would have been listed in the Failed-Files number. So that is the general description of the log file. Now on to the parsing.

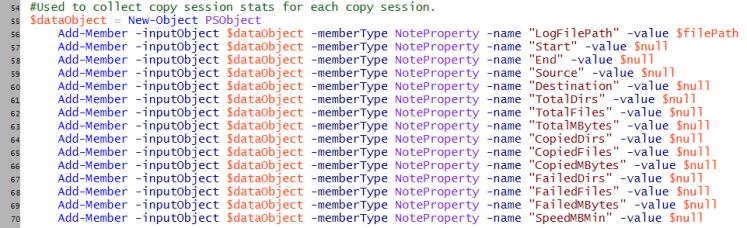

For each Robocopy log file that is scanned a data object will be created. The data object will be used to hold the various metrics values that we capture using Regular-Expression matching. He is a screenshot of the data object properties:

Notice that we are capturing a wide variety of data from the text in the log file. As we convert the data into objects it becomes much more useful than plain text: now we can perform mathematical functions, and use the data to feed database tables. So how do we capture and convert this text from a log file into objects? Regular-Expressions!

A lot of information is available on Regular-Expressions (known here after as RegEx). There are many methods to perform the type of RegEx filtering we are doing here. As I write scripts for our team at work, I try to be verbose in the way I do things to make the script readily understandable for all team members. So, the following can be done in a more succinct manner, but I chose this implementation for the sake of clarity. If you downloaded the file previously using the Box link provided above, you can follow along with this general line by line discussion.

Lines 1 – 10: The parameter input for the script. Only 1 parameter is used, -FilePath provides the full path to the log file being scanned.

Lines 15 – 42: Set up the local folder values, and setup the error logging mechanism.

Lines 45 – 51: Set up global variables for use in the script.

Lines 53 – 69: Create an object to hold the values parsed from the text read from the log file.

Lines 71: Test to see if the path to the log file is valid.

Lines 73 – 92: A function to clear the contents of the parsed text data object.

Lines 94 – 110: Set the values in the data object to ‘Logfile Corrupted’. This will be used when a log file has been found to be badly formatted. This will occur when a log file is being written to simultaneously by multiple Robocopy sessions.

Lines 112: Read the contents of the log file, and store them into $roboCopyStr.

Lines 113 – 276: Loop to read through each line ($line) of the value stored in $roboCopyStr. When the text in $line matches various RegEx statements it will be assigned as a value for a property in the data object.

The following table is a summary of the RegEx matches used in this script. They are accompanied by an example of the type of text they are looking for, and the variable within the script that is affected. The ‘Regular Expression’ column in Table 1 should be compared to Lines 113 – 276 of the script.

| Regular Expression | Matched Text | Affected DataObject Property |

| ‘^\s+Started\s:.*’ | Started : Sun Feb 08 21:00:01 2015 | $dataObject.Start |

| ‘^\s+Source\s:.*\\\\.*\\[A-Z]\$.*’ | SourceServer\S$ | $sourceServer / $sourceDrive |

| ‘^\s+Dest\s:.*\\\\.*\\[A-Z]\$.*’ | TargetServer\T$ | $targetServer / $targetDrive |

| ‘^\s+Ended\s:.*’ | Ended : Sun Feb 08 21:05:53 2015l | $dataObject.End |

| ‘^\s+Source\s:.*’ | Source : \\SourceServer\S$\… | $dataObject.Source |

| ‘^\s+Dest\s:.*’ | Dest : \\TargetServer\T$\… | $dataObject.Destination/td> |

| ‘^s*Replicating_SysAdmin’ | Replicating_SysAdmin: PP1071 | $replicatingSysAdmin |

| ‘^\s+Dirs\s:\s*’ | Dirs : 3217 1 3216 … | $dataObject.TotalDirs = $dirs[0] $dataObject.CopiedDirs =$dirs[1] $dataObject.FailedDirs =$dirs[4] |

| ‘^\s+Files\s:\s[^*]’ | Files : 30920 4 30916 … | $dataObject.TotalFiles = $files[0] $dataObject.CopiedFiles = $files[1] $dataObject.FailedFiles = $files[4] |

| ‘^\s+Bytes\s:\s*’ | Example: Bytes : 1.607 g 0 … | $dataObject.TotalMBytes = $tempByteArray[0] $dataObject.CopiedMBytes = $tempByteArray[1] $dataObject.FailedMBytes = $tempByteArray[4] |

| ‘^\s+Speed\s:.*min.$’ | Dest : \\TaargetServer\R$\Pl… | $dataObject.Destination |

Table 1: RegExs and Their Affect in This Script.

Line 278: When each line of $roboCopyStr has been read the For loop is exited. The each parsed line of the read text that has matched one of the RegEx expressions has been stored into the data object $dataColl, or one of the other variables: $sourceServer,$sourceDrive,$targetServer,$targetDrive, and $replicatingSysAdmin.

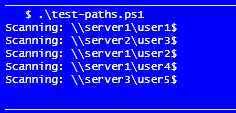

Here is a sample run of the script:

.\scan-RobocopyLogs.ps1 -filePath .\150208_TargetServer_S_MSO_R.TXT

Looking at the output of Fig 3, you can see that when we run the script it provides several valuable pieces of information that were parsed from the log file. The output from this script is intended to be used as the return data from a call in another script.

That is all for now. Up next will be:

“Capturing Robocopy Metrics: Setting Up the Replication Log”

Thank you for reading.

Have a great day.

Patrick